Introduction

This is the summary report of a project funded by the Teaching and Learning Research Initiative (TLRI) between 2006 and 2008. The project researched evidential links between academic development and student outcomes. It focused on the support provided by academic developers in seven New Zealand universities for teachers of first-year papers and the impact this support had on student learning. Academic developers worked collaboratively with teachers to develop Teaching and Learning Enhancement Initiatives (TLEIs) intended to improve student pass rates.

The report uses an orthodox format. It begins by summarising the literature that establishes the research problem and its importance, then outlines the conceptual underpinnings of the project and the steps taken to answer three research questions. Next, it reports the findings related to these questions. The answers were limited by the complexity of the research problem; this is recognised in the fourth section, which discusses complexity theory as well as the results. Finally, the report draws conclusions and suggests ways in which the link between academic development and student outcomes might be strengthened.

Literature review

Research on academic development, teaching, learning and student outcomes as discrete topics is extensive. However, there is little research evidence on the direct links between academic development and student outcomes. Prebble et al. (2004), in a literature synthesis commissioned by the New Zealand Ministry of Education, found that there were “very few published studies that were able to draw a strong evidential link between such programmes and students’ study outcomes” (p. vii), and they concluded that only two studies made “a serious attempt” to study the academic development and student learning link. They also noted that only three of over 150 studies reviewed were New Zealand-based.

Prebble et al. are not alone in noting the absence of research linking academic development with learning. Trowler and Bamber (2005), for example, note that:

there is little research which clearly links effective student learning with improvements stemming from lecturer training … This does not mean that courses are ineffectual, simply that significant evidence has not yet been accumulated. (p. 81)

A variety of reasons for this gap have been suggested. Guskey (1997), for example, noted confusion and inconsistency in the criteria used to gauge teacher effectiveness, compounded by the limited use of student learning as the key impact criterion. He also considered the prevalent search for main effects misguided because it did not adequately account for subtle variations in the complex interaction of factors associated with any professional development initiative.

Nevertheless, research conducted in the school sector (e.g., Elmore, 2002; Garet, Porter, Desimone, Birman, & Yoon, 2001; Ingvarson, Meiers, & Beavis, 2005; Kedzior, 2004; Mundry, 2005; Snow-Renner & Lauer, 2005; Supovitz, 2001) and in the tertiary sector (e.g., Haigh et al., 2006) enables a synthesis of what is known about the relationship between academic development and teaching. Engagement between developer and teacher best occurs on a coherent and continuous basis over an extended period of time, rather than in one-off or disconnected episodes. Work is best organised in iterative cycles, time needs to be provided for teacher learning, practice and investigation, and follow-up support is important.

Development activities should be closely aligned with teachers’ personal goals, interests and needs in relation to immediate curriculum, learning, teaching and assessment issues, and are best woven into the ongoing, daily work of the teacher. Research plays a critical part in this relationship and points to the following practices. Developers’ interventions are informed by the best evidence from research on learning and teaching, and teachers are introduced to this evidence. Developers support teachers’ active inquiries into the questions and issues being addressed, and model such inquiry. Developers are constantly informed during development activities by data about students’ responses to teacher initiatives, and they take account of, and align changes with, other initiatives being promoted within the teacher’s work environment. Shulman (1987) adds that during the development process, developers and teachers generate pedagogical content knowledge that blends content and pedagogy into an understanding of the nature of interventions.

Prebble et al. (2004) synthesised five key propositions for academic developers from their research into the nature of academic development interventions.

- Short training courses are unlikely to lead to significant change in teaching behaviour. Such courses tend to be most effective when used to disseminate information about institutional policy and practice, or to train staff in discrete skills and techniques.

- The academic work group is generally an effective setting for developing the complex knowledge, attitudes and skills involved in teaching.

- Teachers can be assisted to improve the quality of their teaching through obtaining feedback, advice and support for their teaching from a colleague or academic development consultant.

- Student evaluations are among the most reliable and accessible indicators of the effectiveness of teaching. When used appropriately, they are likely to lead to significant improvements in the quality of the teaching.

- Teachers’ conceptions about the nature of teaching and learning are the most important influences on how they teach. Intensive and comprehensive staff development programmes can be effective at transforming teachers’ beliefs about teaching and learning and their teaching practice (p. 91).

Although researchers have found ways of describing the relationship between academic developers and teachers, they have said less about how to translate this relationship into learning outcomes. Haigh and Naidoo (2007) indicate that even connecting teaching and learning is extremely complex. They describe the unknowns between teaching and learning in terms of the many factors that can potentially influence teacher and student learning. Many of these factors are not readily recognised or are unpredictable; they give rise to complex interactions between teaching and learning, and teachers cannot control all of them. Relationships are therefore probabilistic rather than certain, and a development agenda is necessarily concerned with improving the odds for learning rather than ensuring learning (Haigh & Naidoo, 2007). Evidential links between academic development and teaching have been forged, but links between teaching and learning are far more complex, and evidential links between academic development and learning outcomes have been assumed rather than established. The research team wished to contribute to filling this gap.

Methodology and methods

Unlocking Student Learning was conceived as a national project involving all eight New Zealand universities, each completing individual case studies investigating the impact of academic development on student learning outcomes. Although all of the universities were involved in proposing and planning the project, Waikato University withdrew due to workload and staffing issues. All other universities completed case studies, the findings from which are synthesised in this national summary report. Individual case studies from five universities follow this overview.

Case study 2: University of Canterbury

Case study 3: Lincoln University

Case study 4: Massey University

Case study 5: Victoria University of Wellington

Purposes and research questions

Academic developers and course teachers collaboratively designed a range of Teaching and Learning Enhancement Initiatives (TLEIs) to improve student learning outcomes, and the project gauged the impact of these TLEIs on student learning, using pass rates as an outcome measure. The research was designed to address the following research questions:

- How can academic developers and teachers work together to enhance student learning experiences and performance?

- What impact do teaching and learning enhancement initiatives (TLEIs) developed by academic developers and teachers have on students’ learning experiences and achievement in large first-year classes?

- How can the impact of academic development on student learning be determined? (What indicators/measures can be used to evaluate enhancement of student experience and achievement?)

Conceptual underpinnings

It might be argued that establishing a strong evidential link between academic development and pass rates requires an experimental design, but a number of factors militate against such a design. As Prebble et al. (2004) observe, this evidential link requires investigation of an indirect “two-step” relationship: between academic development and teaching, and between teaching and student learning. This is a very complex relationship with numerous variables, and a change in any single variable associated with either step may affect the results. Prebble et al. also acknowledge that it is difficult to replicate “typical” academic development initiatives in authentic research environments because of their inherent complexity. An experimental design is further inhibited by the fact that views about the nature of academic development work are diverse and contested, and the histories and missions of the eight New Zealand universities are sufficiently different to make generalisation difficult. The diverse epistemological assumptions of the different disciplines are similarly difficult to accommodate in an experimental design.

As a consequence, an experimental design was rejected. A multiple case study approach was chosen instead, to enable common elements of the link between academic development and learning outcomes to be identified and synthesised. This approach enables understanding and insights to be gained and tentative conclusions drawn. Case studies are quantitative and/or qualitative studies within a specified and bounded context. They were seen as being particularly useful in this project because they enabled an in-depth examination of different disciplines at each university.

The project was conceived as a series of iterative intervention case studies. Possible interventions (TLEIs) were discussed by the national research team, but the individual interventions used were chosen collaboratively by academic developers and teachers within each case study institution to meet institutional, disciplinary and student needs. TLEIs focused on curriculum design, teaching development, teaching methods and strategies, and/or assessment. To acknowledge the differences between universities, variable time frames for the completion of each TLEI were negotiated, including whether two or three iterations were to be used over the 3 years of the study. While differences between institutions were acknowledged, the TLEIs chosen were informed by research (e.g., Gibbs & Coffey, 2004; Kreber & Brook, 2001; Menges & Austin, 2001).

The results for each TLEI were evaluated using both quantitative and qualitative methods. Because individual case studies used different TLEIs on different groups of students in different subject areas, institutional teams used different data collection strategies and methods, including a common international literature review, student outcome data (such as pass rates), student ratings of the course and its teachers, surveys, interviews, and reflective journals.

Research approaches

Each partner institution selected a large first-year course to participate in the project. Student numbers ranged from 126 to 2,527 in the seven courses. Of the 6,040 students involved, 544 (9 percent of the total enrolled) either withdrew or did not sit the final examination. Subjects ranged across a variety of disciplines, including anthropology, hospitality management, law, computing, economics and physics. The number of academic developers and teachers involved also varied. In the majority of cases, one academic developer was involved in the research, but in two universities there were five. Teacher numbers were similarly varied, from one in the majority of institutions to 17 in one university. The nature of the TLEIs also varied greatly, although themes are evident: technological interventions, consultancies and instructional design changes. Table 1 summarises the institutions, courses chosen, TLEIs and numbers of iterations used.

| University | First-year course | Student no.* | TLEI | ADs no. | Teacher no.** | Iterations |
|---|---|---|---|---|---|---|
| Auckland | Anthropology 1 | 775 (643) | Implementation of i-lectures and podcasting in addition to lectures to support teaching staff and student learning | 1 | 2 | 3 |
| AUT University | Introduction to Hospitality Management | 207 (191) | Teacher consultancy (advice, ideas and information) on student retention and success outcomes, curriculum content and assessment | 5 | 1 | 3 |
| Canterbury | The Legal System (LAWS101) | 1364 (1194) | Design and implementation of a Blackboard learning module and iterative test to enhance students’ understanding of key concepts | 2 | 1 (9) | 3 |
| Lincoln | Computing (COMP102) | 126 (103) | Instructional design change in information presentation techniques to enhance student engagement with course content | 1 | 1 (1) | 2 |
| Massey | Principles of Microeconomics (178.101) | 163 (148) | Instructional design and related aspects/teaching methods, web support and assessment of student learning | 5 | 1 | 2 |
| Otago | Physics 110 | 2527 (2476) | Training of laboratory demonstrators to provide effective teaching and learning support for students | 1 | 2 (17) | 3 |
| Victoria University of Wellington | Foundations of Information Systems (INFO101) | 878 (741) | Tutor training and tutorials on course-specific learning outcomes | 1 | 6 | 2 |
| Total | | 6040 (5493) | | 16 | 14 (27) | |

* Indicates the total enrolled; the number in brackets is the number who sat the examinations.

** Number of lecturers; the number in brackets includes other lecturers, tutors and/or demonstrators in the course teaching team.
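The non-completion figure given above can be checked directly against the per-course numbers in Table 1. The short Python sketch below is illustrative only (the report does not describe any software): it sums the enrolment and examination figures per course. Summing the bracketed per-course figures gives 5,496 examination candidates, and the difference from the 6,040 enrolled is the 544 students (about 9 percent) who withdrew or did not sit.

```python
# Enrolment and examination figures from Table 1: (course, enrolled, sat exam).
COURSES = [
    ("Auckland", 775, 643),
    ("AUT University", 207, 191),
    ("Canterbury", 1364, 1194),
    ("Lincoln", 126, 103),
    ("Massey", 163, 148),
    ("Otago", 2527, 2476),
    ("Victoria University of Wellington", 878, 741),
]


def non_completion(courses):
    """Return (enrolled, sat, did_not_sit, percent_did_not_sit) summed over all courses."""
    enrolled = sum(e for _, e, _ in courses)
    sat = sum(s for _, _, s in courses)
    did_not_sit = enrolled - sat
    return enrolled, sat, did_not_sit, 100 * did_not_sit / enrolled


enrolled, sat, missing, pct = non_completion(COURSES)
print(f"{enrolled} enrolled, {sat} sat, {missing} did not sit ({pct:.1f}%)")
```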

An evaluation of each TLEI was carried out at the end of an iteration, and the results were used to reconsider and/or revise the TLEI on a subsequent iteration. Each team selected evaluative methods from the array agreed by the national project team. Methods selected included:

- surveys of academic developers and teachers to obtain data about, for example, staff conceptions of learning, real vs. perceived barriers to change, attitudes towards academic development provision, gaps in provision, problems with teaching first-year classes

- interviews with teachers, students and academic developers to obtain the data referred to above

- surveys and focus group discussions with teachers and/or students, including small-group instructional diagnoses with students

- observation of, and feedback on, teaching in the context of courses

- reflective e-journals and diary logs

- document analysis, including academic development strategies and interventions/TLEIs; institutional data, e.g. evidence of change in student learning; and outcomes indicators, e.g. grades, retention and student evaluation of teaching.

The research team found that identifying links between academic development and student outcomes was a complex business, too complex to allow a rigorous treatment of all the variables involved. It was therefore decided to focus on student pass rates as one example of student outcomes. Data obtained from an examination of pass rates in each institution were aggregated to carry out an ordinary least squares regression analysis of the impact of the TLEIs on student learning. This analysis did not consider other variables that may also have affected results. At the institutional case study level, however, some attempts were made to differentiate and trace the impact of a wider range of potential influences on student learning.

Findings were discussed and reviewed in project team workshops and meetings, and in the light of studies in the literature. They included data from surveys, interviews, focus group discussions, comparative benchmarking and document analyses. Reviewing the impact of each intervention provided ideas for change and for implementing new strategies to support teaching and student learning. By the end of iteration 3 there were sufficient data and analysis to document individual case studies, and data and examples of good practice were shared.

Results

In this section we report selected answers to the three research questions guiding the project. In the first instance, the answers are derived from the analyses made of aggregated case study data and insights obtained from the participant teachers and academic developers. There is value in reading the case study reports in conjunction with the results of the project as a whole. Answers to the first question, “How can academic developers and teachers work together to enhance student learning experiences and performance?”, were obtained from surveys completed by academic developers and teachers. Answers to the second question, “What impact do Teaching and Learning Enhancement Initiatives (TLEIs) developed by academic developers and teachers have on students’ learning experiences and achievement in large first-year classes?”, were derived from case study pass rates. The third question, “How can the impact of academic development on student learning be determined?”, was addressed by analysing pass rates using an ordinary least squares regression procedure across the combined institutional data.

1. How can academic developers and teachers work together to enhance student learning experiences and performance?

A number of qualitative surveys were used to assess the viability of the collaborative partnerships between academic developers and teachers implementing the TLEIs. The results reported here are drawn from the academic developers’ data, but members of teaching teams tended to express similar views. The findings largely supported the assumption that collaboration between academic developers and teachers was a valuable first step in establishing a link between academic development, teachers and learning. The results reported here focus on four areas:

- ways of engaging in academic development

- specific insights and benefits

- challenges and obstacles to the relationship

- the research dimension in the academic developer–teacher partnership.

Ways of engaging in academic development

Table 2 summarises some of the main themes identified by academic developers in the survey as being important for building the collaborative relationship.

| Theme | Examples |
|---|---|
| Facilitating | |
| Advising | |
| Discussing research | |
| Contextualising | |

Specific insights and benefits

From the academic developers’ point of view there were many advantages to working collaboratively with teachers on the research project. Comments were enthusiastically in favour of working with a colleague in a project approach to academic development:

I am now more than ever convinced about the project approach rather than an evaluative feedback approach of the academic development. The project approach allows for better teaching to develop as well as encouraging more reflective teachers.

A number mentioned the advantage of working with actual teacher needs:

Because we started with this real context, real constraints, and real possibilities, the collaboration has felt more genuine, impacting, and potentially longer lasting. This type of collaboration leads to all of the hallmarks of sustainable learning and action: ownership, co-creation, negotiation and contextualization.

Indeed the contextual nature of collaboration was pointed out by a number of respondents:

This project has also reinforced the belief that academic development has to be context embedded … When embedded, it does become a co-production because of the different specialisations of the AD and the discipline teacher.

Others felt that working collaboratively and directly with departments, facilitating “development” for their needs and generating ideas directly from their questions, issues and successes was more effective than generic workshops, and that

this form of academic development work can keep an academic developer grounded. For example, contact over time has helped ensure our continuing awareness of [teacher]’s time constraints, the pressures of a full teaching/ marking timetable and the difficulties of putting together a well-taught paper.

Challenges and obstacles to the academic developer–teacher relationship

Although benefits dominated, both the academic developers and the teachers recognised that significant challenges or obstacles could be encountered. Examples of these included:

- having adequate time to do justice to intentions, and being able to arrange mutually convenient times for collaborative work

- ensuring collaborative work is well planned and managed

- allowing for the possibility that the natural chemistry between academic developer and teacher may not always be conducive to this approach

- working with a teacher who is philosophically opposed to the academic developer’s approach

- accepting that research associated with collaborative work may be compromised by the need to give priority to the teacher’s and students’ needs

- being aware of demands on, or expectations of, the teacher from other staff

- meeting the challenges involved in gaining insights into unfamiliar disciplines/professions

- assuring collaborating teachers, colleagues and managers that the work is relevant and paying off, and therefore merits their buy-in and support

- overcoming a lack of resources to support desirable TLEIs, including the availability of other staff with related expertise (e.g., ICT)

- finding solutions to complex problems that may have personal or systemic causes.

The research dimension in the academic developer–teacher partnership

When asked about the place that research/scholarship had in their work in general, and in the context of the project, academic developers agreed that the research dimension was very important:

My own and others’ research informs my practice and my continuing development … it influences the purpose and agenda that I have in mind for academic development work (helping teachers become scholarly and scholars re teaching).

Some went so far as to state that research “underpins everything I do, in that I attempt to build all my actions as an academic developer on what I know to be good practice from what I’ve read and studied in higher education”. Some were also attempting to conduct their own research:

I am attempting to contribute more myself to the New Zealand scholarship of academic development, so that New Zealand academic developers can confidently talk about the New Zealand higher education teaching and learning and academic development environment, not just rely on the research that has been conducted elsewhere.

Others saw themselves more as consumers of research:

I make use of it, but don’t do it as I probably should. I tell teaching colleagues about articles that might inform their work … I promote the carrying out of research by my staff and encourage them to do their work in a scholarly way.

Another noted that “scholarship/reflection is important to informing my practice”. One saw research at the core of the partnership model of staff development: “It creates a common goal. And two people aiming to work in a scholarly way together can encourage and inspire each other rather than just producing the goods alone”.

2. What impact do Teaching and Learning Enhancement Initiatives (TLEIs) developed by academic developers and teachers have on students’ learning experiences and achievement in large first-year classes?

Each case study provided pass rate data for its TLEI. Universities collected data over varying periods, in some cases including offerings of papers before the introduction of the TLEIs; in two cases no pre-TLEI data were collected. The number of TLEI iterations also varied: two universities used two iterations, four used three and one used six. The data show the impact of the TLEI on student pass rates: the numbers of students who sat the final examination, and the numbers and percentages of students who passed and failed. The data exclude students who either withdrew or did not sit the final examination. Although pass rates are only one measure, they can be seen as a proxy for an evidential link between academic development and learning outcomes.
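Because students who withdrew or did not sit are excluded, the pass and fail percentages in the tables that follow are computed over examination candidates only. The Python sketch below illustrates that calculation under one stated assumption: the denominator is passed + failed (the report does not give its formula, though this matches, for example, the first Victoria University of Wellington column in Table 9). The function name `pass_fail_percentages` is hypothetical.

```python
def pass_fail_percentages(passed, failed):
    """Return (percent passed, percent failed) among students who sat the final exam.

    Assumption: students who withdrew or did not sit are excluded, so the
    denominator is passed + failed rather than the number enrolled.
    """
    sat = passed + failed
    if sat <= 0:
        raise ValueError("no students sat the examination")
    return 100 * passed / sat, 100 * failed / sat


# First Victoria University of Wellington column in Table 9: 225 passed, 33 failed.
pct_passed, pct_failed = pass_fail_percentages(225, 33)
print(f"{pct_passed:.1f}% passed, {pct_failed:.1f}% failed")  # prints: 87.2% passed, 12.8% failed
```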

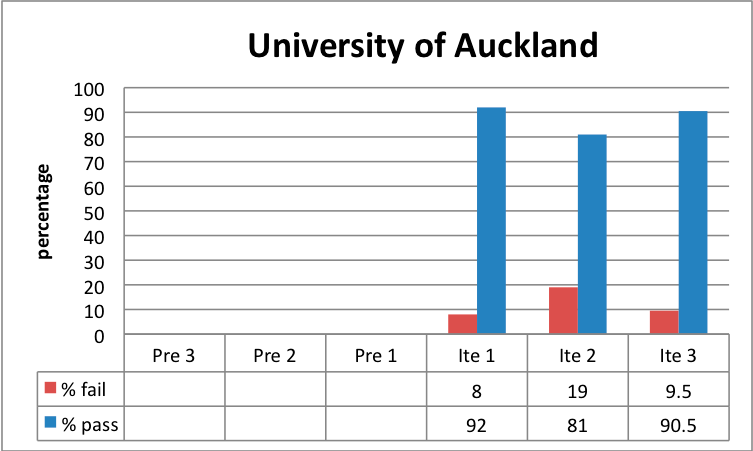

University of Auckland

This project focused on improving the Anthropology 101 course. Traditionally this course has had a high proportion of students not completing, and often poor attendance at lectures. The TLEI involved the introduction of “lectopia” or lecture recording. Table 3 and Figure 1 summarise the results. No pre-intervention data were available.

| University of Auckland | Pre 3 | Pre 2 | Pre 1 | Ite 1 | Ite 2 | Ite 3 |
|---|---|---|---|---|---|---|
| No. sat exam | | | | 234 | 215 | 194 |
| No. passed | | | | 215 | 174 | 176 |
| No. failed | | | | 19 | 41 | 18 |
| Percentage passed | | | | 92 | 81 | 90.5 |
| Percentage failed | | | | 8 | 19 | 9.5 |

Figure 1. University of Auckland pass rates over three iterations

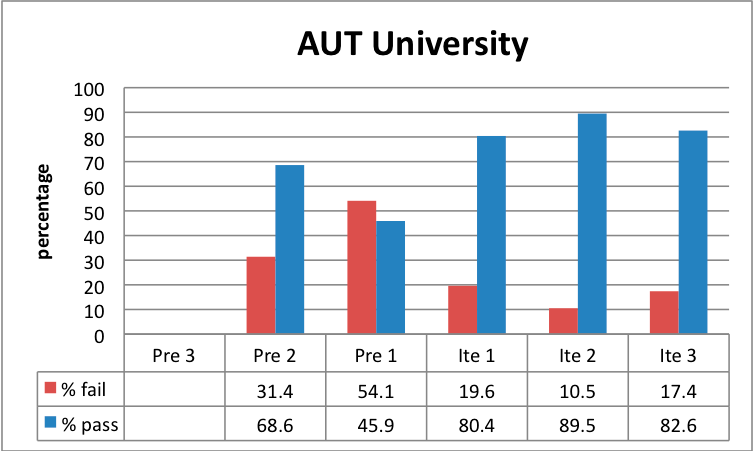

AUT University

Various TLEIs were planned and implemented in Introduction to Hospitality Management over the 3-year period. The focus was on an overall improvement in students’ learning performance and aspects of their experience of learning. In the first phase (2005–2006), changes to learning outcomes were made to improve the validity and value of the students’ learning. In the second phase (2007–2008), the TLEIs had a mainly, but not exclusively, language-related focus. Table 4 and Figure 2 provide overviews.

| AUT University | Pre 3 | Pre 2 | Pre 1 | Ite 1 | Ite 2 | Ite 3 |
|---|---|---|---|---|---|---|
| No. sat exam | | 61 | 94 | 48 | 54 | 89 |
| No. passed | | 42 | 43 | 39 | 48 | 74 |
| No. failed | | 19 | 51 | 9 | 6 | 15 |
| Percentage passed | | 68.6 | 45.9 | 80.4 | 89.5 | 82 |
| Percentage failed | | 31.4 | 54.1 | 19.6 | 10.5 | 17.4 |

Figure 2. AUT pass rates over three iterations, with two pre-TLEI results

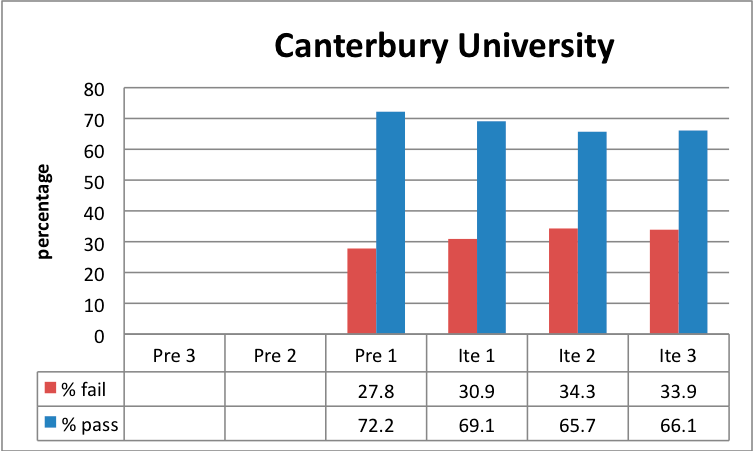

University of Canterbury

The TLEI was designed and implemented in LAWS101: Legal System, focusing on statutory interpretation, an aspect of the course that students consistently found challenging. A Blackboard learning module for statutory interpretation was collaboratively developed. Table 5 and Figure 3 summarise pass rates.

| Canterbury University | Pre 3 | Pre 2 | Pre 1 | Ite 1 | Ite 2 | Ite 3 |
|---|---|---|---|---|---|---|
| No. sat exam | | | 378 | 382 | 403 | 409 |
| No. passed | | | 312 | 300 | 286 | 327 |
| No. failed | | | 120 | 134 | 149 | 168 |
| Percentage passed | | | 72.2 | 69.1 | 65.7 | 66.1 |
| Percentage failed | | | 27.8 | 30.9 | 34.3 | 33.9 |

Figure 3. Canterbury pass rates over three iterations, with one pre-TLEI result

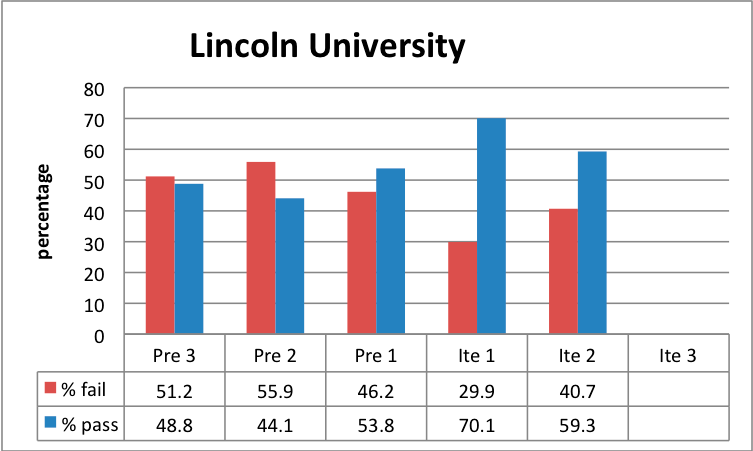

Lincoln University

The intervention at Lincoln University focused on teacher professional development and its impact on first-year student learning, as measured by student results. The TLEI, a change in the presentation of the course, was a refinement of PowerPoint presentations informed by discussions between lecturer and adviser. Table 6 and Figure 4 summarise the results.

| Lincoln University | Pre 3 | Pre 2 | Pre 1 | Ite 1 | Ite 2 | Ite 3 |
|---|---|---|---|---|---|---|
| No. sat exam | 102 | 93 | 77 | 56 | 47 | |
| No. passed | 50 | 41 | 41 | 39 | 28 | |
| No. failed | 52 | 52 | 36 | 17 | 19 | |
| Percentage passed | 48.8 | 44.1 | 53.8 | 70.1 | 59.3 | |
| Percentage failed | 51.2 | 55.9 | 46.2 | 29.9 | 40.7 | |

Figure 4. Lincoln University pass rates over two iterations, with three sets of pre-TLEI results

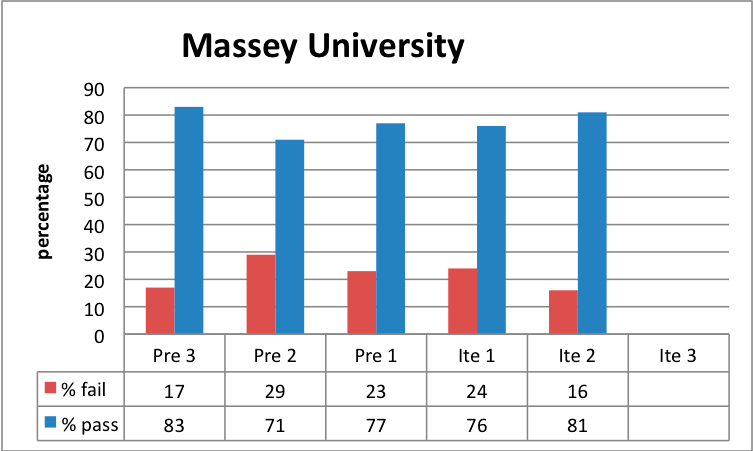

Massey University

At Massey the focus of the intervention was on the impact of instructional design on teaching methods and the effectiveness of instructional design approaches for promoting learning in the Principles of Microeconomics paper. The intention was to improve both completion and achievement levels. See Table 7 and Figure 5 for details.

| Massey University | Pre 3 | Pre 2 | Pre 1 | Ite 1 | Ite 2 | Ite 3 |
|---|---|---|---|---|---|---|
| No. sat exam | 153 | 134 | 96 | 68 | 80 | |
| No. passed | 127 | 95 | 74 | 52 | 67 | |
| No. failed | 26 | 39 | 22 | 16 | 13 | |
| Percentage passed | 83 | 71 | 77 | 76 | 81 | |
| Percentage failed | 17 | 29 | 23 | 24 | 16 | |

Figure 5. Massey pass rates over two iterations, with three sets of pre-TLEI results

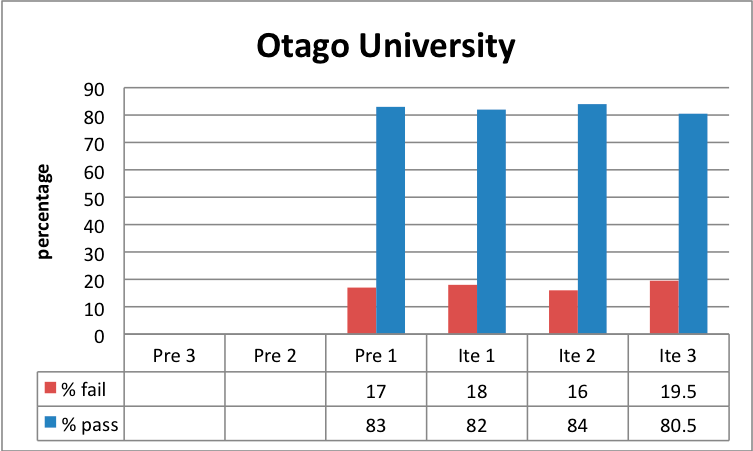

Otago University

For practical reasons the laboratory component of PHSI 110/131 was targeted in the TLEI project. Staff saw a need to improve the relevance of the physics laboratory programme for health science students. The assumption was that if students understood the relevance of the physics content to their intended careers, they would be motivated to develop a deeper understanding of physics and scientific method. Table 8 and Figure 6 provide an overview.

| Otago University | Pre 3 | Pre 2 | Pre 1 | Ite 1 | Ite 2 | Ite 3 |
|---|---|---|---|---|---|---|
| No. sat exam | | | 1117 | 1069 | 1317 | 92 |
| No. passed | | | 927 | 877 | 1106 | 74 |
| No. failed | | | 190 | 192 | 211 | 18 |
| Percentage passed | | | 83 | 82 | 84 | 80.5 |
| Percentage failed | | | 17 | 18 | 16 | 19.5 |

Figure 6. Otago pass rates over three iterations, with one set of pre-TLEI results

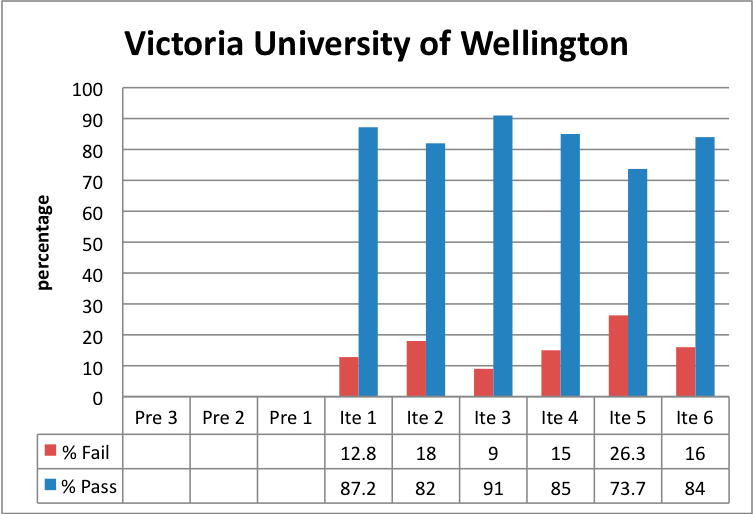

Victoria University of Wellington

The focus of the project team was to encourage a team-based approach to the development and teaching of INFO102. The team members wanted to find out whether effective, sustainable, systemic changes to teaching and administrative practices would have a bearing on students’ learning. The VUW case study outlines a collaborative team approach to teaching and learning in INFO102. Table 9 and Figure 7 provide the details of the results.

| Victoria University of Wellington | Pre 3 | Pre 2 | Pre 1 | Ite 1 | Ite 2 | Ite 3 | Ite 4 | Ite 5 | Ite 6 |
|---|---|---|---|---|---|---|---|---|---|
| No. sat exam | | | | 258 | 50 | 199 | 43 | 159 | 32 |
| No. passed | | | | 225 | 41 | 181 | 37 | 117 | 27 |
| No. failed | | | | 33 | 9 | 18 | 6 | 42 | 5 |
| Percentage passed | | | | 87.2 | 82 | 91 | 85 | 73.7 | 84 |
| Percentage failed | | | | 12.8 | 18 | 9 | 15 | 26.3 | 16 |

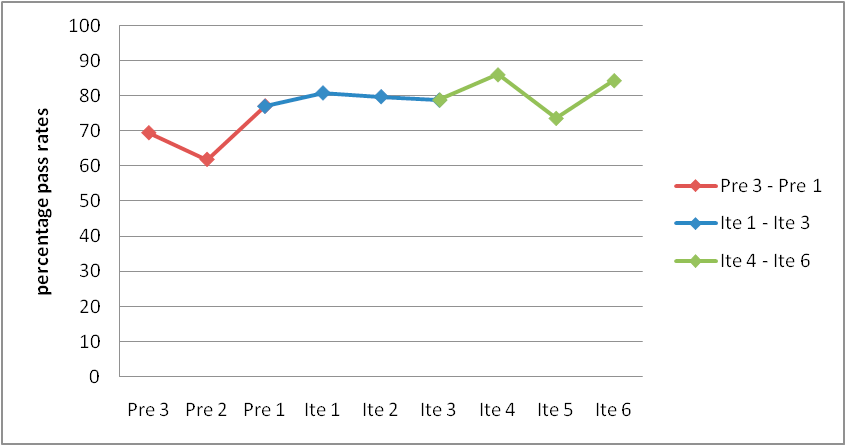

Figure 7. Victoria University of Wellington pass rates over six iterations

3. How can the impact of academic development on student learning be determined? (What indicators/measures can be used to evaluate enhancement of student experience and achievement?)

Composite institutional results were analysed statistically to determine whether the interventions designed collaboratively by academic developers and teachers affected overall average pass rates. This was conceived as an indicator of whether the interventions offered any evidential link between academic development and learning outcomes.
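The report does not state how the composite averages were formed. As a sketch under one assumption, an unweighted mean of the universities' pass percentages for each period, using each university's most recent pre-TLEI result (Pre 1) as its "before" value, reproduces the composite figures of 66.38, 79.5 and 77.5 percent reported in the following paragraph from the values in Tables 3 to 9. The dictionary layout and the `composite` helper are hypothetical.

```python
from statistics import mean

# Pass percentages from Tables 3 to 9. "Pre 1" is each university's most recent
# pre-TLEI result; universities without data for a period are simply absent.
PASS_PERCENT = {
    "Pre 1": {"AUT": 45.9, "Canterbury": 72.2, "Lincoln": 53.8, "Massey": 77, "Otago": 83},
    "Ite 1": {"Auckland": 92, "AUT": 80.4, "Canterbury": 69.1, "Lincoln": 70.1,
              "Massey": 76, "Otago": 82, "Victoria": 87.2},
    "Ite 2": {"Auckland": 81, "AUT": 89.5, "Canterbury": 65.7, "Lincoln": 59.3,
              "Massey": 81, "Otago": 84, "Victoria": 82},
}


def composite(period):
    """Unweighted mean of the universities' pass percentages for one period (assumed method)."""
    return mean(PASS_PERCENT[period].values())


for period in PASS_PERCENT:
    print(period, round(composite(period), 2))
```

An unweighted mean gives a course with 47 candidates the same weight as one with 1,317; weighting by the number who sat would be a different, equally defensible choice and would give different composites.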

Student pass rates improved. The composite average pass rate before the TLEIs were introduced was 66.38 percent. After implementation of the first TLEI (Ite 1), the composite average pass rate increased to 79.5 percent, an increase of 13.12 percentage points. For iteration 2, it decreased slightly to 77.5 percent, before increasing again to 82.14 percent after iteration 3, an increase of 4.64 percentage points. In summary, average student pass rates rose by 15.76 percentage points from before the introduction of TLEIs to the completion of the third iteration. Figure 8 graphs this result.
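Because these changes are differences between two percentages, they are percentage points, not percent. The minimal Python sketch below, which uses only the composite figures reported in this paragraph, separates the absolute change in points from the relative change; the `change` helper is hypothetical.

```python
# Composite average pass rates reported in the text (percent).
before, ite1, ite2, ite3 = 66.38, 79.5, 77.5, 82.14


def change(old, new):
    """Return (absolute change in percentage points, relative change in percent)."""
    return new - old, 100 * (new - old) / old


for label, old, new in [("before -> Ite 1", before, ite1),
                        ("Ite 2 -> Ite 3", ite2, ite3),
                        ("before -> Ite 3", before, ite3)]:
    points, relative = change(old, new)
    print(f"{label}: {points:+.2f} points ({relative:+.1f}% relative)")
```

The overall rise of 15.76 points from a base of 66.38 percent is a relative improvement of roughly 23.7 percent.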

Figure 8. Overall universities’ pass rates

The pass rates from before the introduction of the TLEIs to the completion of iteration 3 show a positive correlation coefficient of .88, calculated using linear regression. One variable was the iteration (three pre-TLEI and three post-TLEI); the other was the averaged pass-rate data of the universities for each iteration (two universities completed two iterations and four completed three). Victoria University of Wellington reported results from six post-TLEI iterations, but only the first three were included in this analysis.
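With a single predictor, the correlation coefficient obtained from a linear regression is Pearson's r, and its square equals the regression R². The Python sketch below computes r directly; the function name is illustrative, and Python 3.10 and later also provide `statistics.correlation` for the same calculation.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Illustration only: the four composite averages quoted earlier in this
# section (one pooled pre-TLEI value, then iterations 1-3).
stages = [0, 1, 2, 3]
pass_rates = [66.38, 79.5, 77.5, 82.14]
r = pearson_r(stages, pass_rates)
print(f"r = {r:.2f}, r^2 = {r * r:.2f}")
```

Applied to those four composite averages, which pool all pre-TLEI results into a single figure, the sketch gives r of about 0.84. The .88 reported above is based on the six-point series described in the text, which is not tabulated in this section, so the two values are not expected to coincide.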

To evaluate whether the project as a whole had a positive impact on pass rates in the participating universities, a simple ordinary least squares (OLS) regression model was estimated. This model is detailed below:

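$$
\text{PASS\_RATE} = \beta_0 + \beta_1\,\text{AUC} + \beta_2\,\text{AUT} + \beta_3\,\text{CAN} + \beta_4\,\text{LIN} + \beta_5\,\text{OTA} + \beta_6\,\text{VIC} + \beta_7\,\text{POST\_INT} + \varepsilon \tag{1}
$$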

where:

- PASS_RATE is the pass rate, expressed as a percentage (out of 100)

- AUC is a dummy variable denoting the University of Auckland's observations (equals 1 if the observation is for the University of Auckland, zero otherwise)

- AUT is a dummy variable denoting AUT University’s observations

- CAN is a dummy variable denoting Canterbury University’s observations

- LIN is a dummy variable denoting Lincoln University’s observations

- OTA is a dummy variable denoting Otago University’s observations

- VIC is a dummy variable denoting Victoria University’s observations

- POST_INT is a dummy variable denoting whether the observation is pre- or post-intervention (equals 1 if the observation is in the post-intervention period, zero otherwise)

- ε is the equation error

- β0 to β7 are coefficients to be estimated.
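Equation (1) is a standard dummy-variable OLS specification. The following Python sketch, assuming NumPy is available, shows how such a design matrix can be built and estimated. The function names and the code "MAS" for Massey observations are hypothetical; any label outside the six dummy codes is treated as the omitted base institution. It illustrates the structure of the model only and does not reproduce the report's estimates, because the 32 underlying observations are not listed in this section.

```python
import numpy as np

# Institutions with their own dummy in equation (1). Massey University is the
# omitted base, so any other label (e.g. the hypothetical "MAS") gets all zeros.
DUMMY_CODES = ["AUC", "AUT", "CAN", "LIN", "OTA", "VIC"]


def design_matrix(institutions, post_flags):
    """Rows of [1, AUC, AUT, CAN, LIN, OTA, VIC, POST_INT], one per observation."""
    rows = []
    for inst, post in zip(institutions, post_flags):
        dummies = [1.0 if inst == code else 0.0 for code in DUMMY_CODES]
        rows.append([1.0, *dummies, float(post)])
    return np.array(rows)


def fit_ols(institutions, post_flags, pass_rates):
    """Estimate beta_0..beta_7 by OLS; return (coefficients, standard errors, R^2)."""
    X = design_matrix(institutions, post_flags)
    y = np.asarray(pass_rates, dtype=float)
    beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
    if rank < X.shape[1]:
        raise ValueError("design matrix is rank-deficient; a coefficient is not identified")
    resid = y - X @ beta
    n, k = X.shape
    sigma2 = (resid @ resid) / (n - k)        # residual variance with n - k df
    cov = sigma2 * np.linalg.inv(X.T @ X)     # classical (homoskedastic) covariance
    se = np.sqrt(np.diag(cov))
    r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    return beta, se, r2
```

The standard errors here use the classical formula σ̂²(XᵀX)⁻¹; that choice is an assumption of the sketch. The rank check matters in practice: if, say, every observation were post-intervention, POST_INT would duplicate the constant and β7 could not be estimated.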

Summary statistics for each of the variables used in this analysis are shown in Table 10.

| Variable | Mean | Median | Minimum | Maximum | SD |
|---|---|---|---|---|---|
| PASS_RATE | 75.0031 | 80.4500 | 44.1000 | 92.0000 | 13.0773 |
| AUC | 0.0937500 | 0.000000 | 0.000000 | 1.00000 | 0.296145 |
| AUT | 0.156250 | 0.000000 | 0.000000 | 1.00000 | 0.368902 |
| CAN | 0.125000 | 0.000000 | 0.000000 | 1.00000 | 0.336011 |
| LIN | 0.156250 | 0.000000 | 0.000000 | 1.00000 | 0.368902 |
| OTA | 0.125000 | 0.000000 | 0.000000 | 1.00000 | 0.336011 |
| VIC | 0.187500 | 0.000000 | 0.000000 | 1.00000 | 0.396558 |
| POST_INT | 0.687500 | 1.00000 | 0.000000 | 1.00000 | 0.470929 |
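Because each dummy variable takes only the values 0 and 1, its mean in Table 10 is the share of the 32 observations it covers, and its standard deviation follows from that share. A short Python check under that reading (the helper name is ours; the means and n = 32 come from the text):

```python
from math import sqrt

N_OBS = 32  # observations used to estimate the model

# Means of the 0/1 dummy variables, from Table 10.
dummy_means = {
    "AUC": 0.09375,
    "AUT": 0.15625,
    "CAN": 0.125,
    "LIN": 0.15625,
    "OTA": 0.125,
    "VIC": 0.1875,
    "POST_INT": 0.6875,
}


def binary_sd(p, n):
    """Sample (n - 1 denominator) standard deviation of a 0/1 variable with mean p."""
    return sqrt(p * (1 - p) * n / (n - 1))


# Implied number of observations per dummy; Massey is whatever remains.
counts = {name: round(p * N_OBS) for name, p in dummy_means.items()}
institution_total = sum(v for k, v in counts.items() if k != "POST_INT")
counts["Massey (base)"] = N_OBS - institution_total

for name, p in dummy_means.items():
    print(f"{name}: {counts[name]} obs, SD = {binary_sd(p, N_OBS):.6f}")
print(f"Massey (base): {counts['Massey (base)']} obs")
```

It recovers 3, 5, 4, 5, 4 and 6 observations for Auckland, AUT, Canterbury, Lincoln, Otago and Victoria, leaving 5 for Massey, with 22 post-intervention observations in all, and it reproduces the tabulated SDs to six decimal places with the sample (n − 1) formula.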

The dependent variable is the pass rate for a particular (pre- or post-intervention) iteration of a course. Differences in pass rates due to institution-specific factors are controlled for by the institution dummy variables. Massey University is the control institution, so there is no Massey-specific dummy variable. The variable of interest in this model is POST_INT, which denotes whether an observed pass rate is a post-intervention pass rate. The model is estimated using 32 observations, and the parameter estimates are shown in Table 11.

| Variable | Coefficient |
|---|---|
| Const | 73.80* (3.775) |
| AUC | 4.526 (6.099) |
| AUT | −6.102 (5.012) |
| CAN | −12.65* (5.403) |
| LIN | −22.38* (4.964) |
| OTA | 1.446 (5.403) |
| VIC | 0.5096 (5.190) |
| POST_INT | 9.512* (3.475) |
| n | 32 |
| R² | 0.7212 |

Note: Standard errors are given in parentheses.

* Indicates significance at the 5 percent level.
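The asterisks in Table 11 can be checked by dividing each coefficient by its standard error and comparing the ratio with a t critical value. A minimal Python sketch, assuming 32 − 8 = 24 residual degrees of freedom and the tabulated two-sided 5 percent critical value of 2.064 for 24 degrees of freedom (neither figure is stated in the report):

```python
# Coefficients and standard errors transcribed from Table 11.
ESTIMATES = {
    "Const": (73.80, 3.775),
    "AUC": (4.526, 6.099),
    "AUT": (-6.102, 5.012),
    "CAN": (-12.65, 5.403),
    "LIN": (-22.38, 4.964),
    "OTA": (1.446, 5.403),
    "VIC": (0.5096, 5.190),
    "POST_INT": (9.512, 3.475),
}

N_OBS, N_PARAMS = 32, 8
DF = N_OBS - N_PARAMS   # assumed residual degrees of freedom (24)
T_CRIT_5PCT = 2.064     # two-sided 5% critical value of t with 24 df (standard table)


def t_ratios(estimates):
    """Map each variable to (t statistic, significant at the 5% level?)."""
    return {
        name: (coef / se, abs(coef / se) > T_CRIT_5PCT)
        for name, (coef, se) in estimates.items()
    }


for name, (t, sig) in t_ratios(ESTIMATES).items():
    print(f"{name:9s} t = {t:6.2f} {'*' if sig else ''}")
```

Only Const, CAN, LIN and POST_INT exceed the critical value, which matches the coefficients marked with an asterisk; POST_INT has a t ratio of about 2.74.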

The R² indicates that the estimated model explains 72 percent of the variation in pass rates. The coefficients on the institution dummies give each institution's pass-rate level relative to the control institution, Massey University. The coefficient of interest, POST_INT, is positive and statistically significant[1]. It suggests that post-intervention pass rates were approximately 9.5 percentage points higher than pre-intervention pass rates, with a 95 percent confidence interval ranging from 2.3 to 16.7 percentage points. This simple model suggests that the interventions (TLEIs) had a statistically significant positive effect of almost 10 percentage points on pass rates in the participating universities.
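The 95 percent confidence interval for POST_INT follows from its estimate and standard error as the coefficient plus or minus t times SE. A sketch in Python, again assuming 24 residual degrees of freedom and a critical value of 2.064 taken from a standard t table (the function name is illustrative):

```python
T_CRIT = 2.064  # t(0.975) with 32 - 8 = 24 df, from a standard t table (assumed df)


def confidence_interval(coef, se, t_crit=T_CRIT):
    """Two-sided interval: coef +/- t_crit * se."""
    half_width = t_crit * se
    return coef - half_width, coef + half_width


# POST_INT estimate and standard error from Table 11.
low, high = confidence_interval(9.512, 3.475)
print(f"95% CI for POST_INT: {low:.1f} to {high:.1f} percentage points")
```

This gives roughly 2.3 to 16.7 percentage points, as reported. Substituting the normal value 1.96 would give a slightly narrower interval of about 2.7 to 16.3; with only 24 residual degrees of freedom, the t value is the appropriate choice.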

Discussion

Qualitative data gathered for this project suggest that the academic developer−teacher collaboration used was a positive platform for introducing interventions. Quantitative data show that in most case studies pass rates improved after a TLEI was implemented. When the pass rates from the individual case studies were pooled, a simple ordinary least squares regression model showed that the interventions had a significant impact on student outcomes in the form of improved overall pass rates. The temptation to conclude that there is an evidential link between academic development and student learning is strong.

However, although the study does show such a link, it is a very limited one. An improvement in one variable can show only that a TLEI leads to improvement in that variable; it does not make a convincing case for a general finding that academic development leads to better student learning and outcomes. This section first summarises the evidence gained about the academic development−learning outcome link for each of the research questions. It concludes by considering the complexity involved in establishing a general link.

How can academic developers and teachers work together to enhance student learning experiences and performance?

There were fewer data on teachers' views about the success of the collaborative model for improving student outcomes than on academic developers' views, but overall teachers agreed with developers that collaboration offered a useful and practical approach to academic development. Academic developers saw project work as superior to workshops and other teaching, a view supported by Lee (1997). Project work enabled academic developers to contextualise their interventions in terms of the teachers' and students' lived work reality, and a number of them observed that this grounded them better than other forms of academic development. Equally important, collaborative project work focused on research: the research dimension was central to the project and sat at the core of partnership development.

Although the partnership model was widely praised for its contribution to the development and implementation of TLEIs, it could not be shown to contribute to improvements in student outcomes other than pass rates. McAlpine, Oviedo and Emrick (2008) drew similar conclusions from their research into the relationship between workshops facilitated by academic developers, course design and student learning. While concluding that the project "enabled us to document some links between the workshops and student experiences of learning", they also acknowledged that "the study does not meet the rigorous standards expected of traditional research designs" (p. 671). Even with this reservation, the partnership model seemed to provide an important foundation for the project in that it enabled the TLEIs to be both academically sound and practically relevant.

What impact do Teaching and Learning Enhancement Initiatives (TLEIs) developed by academic developers and teachers have on students’ learning experiences and achievement in large first-year classes?

The institutional contexts at the various universities differed, as did the large first-year courses selected and the number of TLEIs developed, implemented and researched. It is no surprise, then, that the results achieved by the interventions varied among the institutions. As a result, it is difficult to estimate the comparative success of each intervention, particularly as in some cases TLEIs were adjusted during the life of the project. In institutions where pre-TLEI data were available, the greatest improvement most often occurred after the second iteration of the TLEI, although in two such institutions the largest gains came after the first iteration. In the two institutions where no pre-TLEI data were available, the biggest gains were achieved after the first and third iterations. In one case (AUT) the changes in pass rates were statistically significant; in the others they could more plausibly be attributed to chance.

These data are interesting but do not show a consistent link between academic development input and pass rates, let alone provide persuasive evidence for a link between academic development and student outcomes more generally. What the evidence does suggest is that academic developers working collaboratively with teachers may exercise some influence on pass rates. While the analysis of pass rates for individual institutions shows fluctuations and, in many cases, no sustained increase or decrease, these fluctuations may well be due to student-, teacher- and institution-specific factors outside the scope of the TLEIs. The strength of the regression analysis is that institution-specific factors are controlled for within the model, and it finds that pass rates are almost 10 percentage points higher post-intervention. This is compelling evidence that the TLEIs had a clear, positive impact.

There is also qualitative evidence that the case studies had an impact on teaching and learning in these institutions. The evidence supports Bamber’s (2008) suggestion that

The answer to the question of what can be learned from small-scale, local studies, therefore, is that evaluation can be aligned with its context, and can provide insight into the longer-term local impact of a programme. This can provide rich data, framed within a theoretical understanding, which is shared with and understood by everyone involved, avoiding causal simplicity. (p. 111)

In one case the project led to the creation of a senior-level institutional committee for the purpose of enhancing communication between IT services and academic staff. Lecturer development was evident in a number of cases. In one, the project created a ripple effect on the teaching practices of the lecturer, such that he has now identified other areas for personalising learning using a partnership model. One case study noted the introduction of innovations. Student feedback was particularly affirming in another case. Students rated the paper as more engaging, interesting and enjoyable than other papers they were taking at the time. One teacher’s reflections also suggest that the assistance she was given by the academic developer provided encouragement for her ongoing commitment to improving her teaching and student learning.

How can the impact of academic development on student learning be determined? (What indicators/measures can be used to evaluate enhancement of student experience and achievement?)

Having just noted that the case studies were developed, conducted and researched differently, it may seem questionable to combine average results in order to make statements about average pass-rate trends for all universities. Is this a case of combining apples, pears and strawberries?

The issue is not so much one of aggregating case study results, which has been done successfully (Kuh, Kinzie, Schuh, Whitt, & Associates, 2005; Zepke, Leach, & Butler, 2009), but of combining results derived from different instruments and applications. True, the details of each case study were different, but all were developed within a common conceptual framework arrived at in numerous meetings. As a result, there were many comparable elements.

Another issue is the extent to which the results can be attributed to the participation of academic developers. Clearly, complete attribution is untenable. Academic developers were involved and, according to surveys and interviews, did have an impact on the results, even if that impact was not quantifiable. Despite such conceptual difficulties, it is worthwhile to look at the overall trends of the project. It was decided that the combined results would address the third research question, "How can the impact of academic development on student learning be determined?", and provide some insight into a linear relationship between the TLEIs and student pass rates. What the simple ordinary least squares regression model could not do was provide a more convincing evidential link between academic staff development and student outcomes. Nevertheless, these results do provide an indicative measure of the impact of the interventions on pass rates as one measure of student outcomes (a significant positive effect of almost 10 percentage points). Without question, pass rates improved considerably: by over 15 percentage points from the time TLEIs were introduced to the time the third (or second) iteration was completed, as the overall universities' pass rates show.

It is widely agreed that the indirect two-step link between academic development and teaching, and between teaching and student outcomes, is difficult to establish (Prebble et al., 2004). Findings from this project, among others (e.g., Garet et al., 2001; Ingvarson et al., 2005), suggest a positive link between academic development and teaching. Other projects demonstrate links between teaching and student outcomes. For example, Cornelius-White's (2007) meta-analysis showed that teacher behaviours could improve student outcomes, and Hattie's (2009, p. 22) meta-analysis on student achievement concluded that "The major message is simple—what teachers do matters". However, in this study, as in others, the two-step link from academic development to teaching, and from teaching to student outcomes, has been difficult to establish. It would be difficult even to design experimental studies that controlled for all the variables in this complex relationship.

The complexity factor

This project confirms the finding by Prebble et al. (2004) that no strong evidential link has been established between the work of academic developers and student outcomes. McAlpine et al. (2008) summarise the reasons for this:

While the literature strongly recommends conducting studies linking academic development activities to student learning, there is inherent difficulty in carrying them out. It involves tracking impact on lecturer thinking, through impact of that thinking on their teaching actions to impact on students they work with, and ideally comparison with a control group. Further, even if one can create these conditions, many factors can influence the findings, for instance, personal (e.g., lack of skill to carry out the desired change), organisational (e.g., policies that create large classes, lack of funding for Teaching Assistants), and methodological (e.g., instruments not sensitive enough, individuals unwilling to participate). Reconciling these issues is an extremely difficult task. (p. 671)

McAlpine et al. suggest that the links between academic developers and student outcomes are complex. Such complex relationships are probably more easily theorised than delineated. Complexity theory adapts the metaphor of a network to help trace the complex relationships between phenomena such as academic development, teaching, learning and outcomes. McDaniel and Jordan (2009) discuss some features of such a network. They suggest that, in creating emergent order in networked systems, complexity thinking focuses on the interdependence of connection and distinction rather than on linearity and hierarchy. Academic development and student outcomes join together in a relational network while also belonging to their own separate networks made up of, say, academic development strategies and learning.

Davis and Sumara (2008) describe the architecture of complex networks as a hairball, but then tease out this metaphor by referring to complex networks as collections of nodes (academic development strategies) clustered into larger nodes (academic development, teaching and learning), which are clustered into yet larger nodes (the classroom). They argue that nodes are not “basic units”, but each should be understood as a network in its own right, linked to other node networks in what they call hubs (academic development strategies + teaching + learning + environment +++). In one sense, each node is connected with every other node in the network through a relatively small number of connections. In complexity thinking, such relationships are dynamic and non-hierarchical, and are not easily captured. Most nodes and hubs interact with their closest neighbours.

This idea of a dynamic and non-hierarchical network in complexity thinking seems a good fit for the overlapping relationships between academic development and student outcomes. Complexity thinking helps to explain why the direct evidential link between them is not easily established. Tosey (2002) provides a useful summary:

Complexity theory is often heralded as something new. But maybe we all, already, have a tacit awareness of the paradoxical nature of systems. It is just, perhaps, that we do not take this awareness as seriously as we might, and we only use it in certain contexts. For example, as educators we already recognize that we cannot control or determine (many forms of) learning; that students are essentially self-organizing; that (much) learning is emergent and constructed—and often the most valuable learning is like this. We also believe that our educational relationship to students is highly influential—we do not stand outside their learning. We recognize the paradox that if we focus on learning (product) that can be ‘engineered’ we may limit the educational experience. Many of us believe that the best we can do—and that what we should do as professionals—is to create conditions under which learning is likely to emerge. This implies working at the edge of chaos. (p. 2)

Conclusions and possibilities for improving practice

Valuable answers to each of the research questions were generated, but the overall intention of establishing a substantial general link between academic development and student outcomes was not achieved. Nevertheless, the project can report a number of potentially useful findings.

- There is a link between academic development and student pass rates, although academic development is not the sole reason for the improvement of pass rates. Other reasons, not researched in this study, also contribute.

- The introduction of TLEIs led to an improvement in overall universities' pass rates (Figure 8). In the individual case studies, the TLEIs led to improved pass rates in six of the seven universities. However, improvements were not evenly distributed across case studies or iterations. It is not possible to claim that the intervention alone was responsible for moving pass rate outcomes.

- The partnership model used to develop TLEIs worked well. Although it is not possible to estimate how far the partnerships contributed to improving pass rates, they were judged to be successful innovations by academic developers and teachers in terms of using a research approach to develop, implement and evaluate TLEIs.

- The questions investigated in the project were always seen as complex, and complexity theory offers an explanation of why and how this is so. A reading of complexity theory suggests that the quest for establishing direct evidence-based links between academic development and student outcomes is unlikely to succeed.

What do these findings offer for improving practice both in academic development and teaching while pursuing improved student outcomes? The following possibilities are worth considering.

- Adopt an academic development model that is focused on academic developers and teachers working collaboratively on projects.

- Increase the proportion of academic development activity devoted to improving outcomes in one course at a time. A reduction in workshop activity may result.

- Select interventions that will improve specific outcomes for specific courses. For example, courses with retention problems should focus on improving retention, and those with widespread disengagement should focus on improving engagement.

- Develop research-based interventions that also build the scholarship of teaching among teachers.

Footnote

- This result indicates that, if the interventions had no true effect, a coefficient at least this far from zero would be expected to arise by chance less than 5 percent of the time; the effect is therefore judged to be significantly different from zero.

References

Bamber, V. (2008). Evaluating lecturer development programmes: Received wisdom or self-knowledge? International Journal for Academic Development, 13(2), 107−116.

Cornelius-White, J. (2007). Learner-centered teacher-student relationships are effective: A meta-analysis. Review of Educational Research, 77(1), 113−143.

Davis, B., & Sumara, D. (2008). Complexity and education: Inquiries into learning, teaching and research. New York, NY: Routledge.

Elmore, R. (2002). Bridging the gap between standards and achievement: The imperative for professional development in education. Washington, DC: Albert Shanker Institute.

Garet, M., Porter, A., Desimone, L., Birman, B., & Yoon, K. (2001). What makes professional development effective?: Results from a national study of teachers. American Educational Research Journal, 38(4), 915−945.

Gibbs, G., & Coffey, M. (2004). The impact of training of university teachers on their teaching skills, their approach to teaching and the approach to learning of their students. Active Learning in Higher Education, 5(1), 87−100.

Guskey, T. (1997). Research needs to link professional development and student learning. Journal of Staff Development, 18(2), 36−40.

Haigh, N. J., & Naidoo, K. (2007). Investigating the academic development and student learning relationship: Challenges and options. Paper presented at the annual conference of the International Society for the Scholarship of Teaching and Learning, Sydney, Australia.

Haigh, N., Neil, L., Kirkness, A., Parker, L., Lester, J., Gossman, P., et al. (2006). Unlocking student learning: The impact of Teaching and Learning Enhancement Initiatives (TLEIs) on first-year university students. Paper presented at the New Zealand Association of Research in Education national conference, Rotorua.

Hattie, J. (2009). Visible learning: A synthesis of over 800 meta-analyses relating to achievement. London, England: Routledge.

Ingvarson, L., Meiers, M., & Beavis, A. (2005). Factors affecting the impact of professional development programs on teachers' knowledge, practice, student outcomes and efficacy. Education Policy Analysis Archives, 13(10). Retrieved 16 March 2006 from http://epaa.asu.edu/epaa/v13n10/

Kedzior, M. (2004). Teacher professional development. Education Policy Brief, 15, 1−6.

Kreber, C., & Brook, P. (2001). Impact evaluation of educational development programmes. International Journal for Academic Development, 6(2), 96−102.

Kuh, G., Kinzie, J., Schuh, J., Whitt, E., & Associates. (2005). Student success in college: Creating conditions that matter. San Francisco, CA: Jossey-Bass.

Lee, A. (1997). Working together? Academic literacies, co-production and professional partnerships. Literacy and Numeracy Studies, 7(2), 65−82.

McAlpine, L., Oviedo, G., & Emrick, A. (2008). Telling the second half of the story: Linking academic development to student learning. Assessment and Evaluation in Higher Education, 33(6), 661−673.

McDaniel, R., Jr., & Jordan, M. (2009). Complexity and postmodern theory. In J. Johnson (Ed.), Health organizations: Theory, behavior, and development (pp. 63−84). Sudbury, MA: Jones & Bartlett Publishers.

Menges, R., & Austin, A. (2001). Teaching in higher education. In V. Richardson (Ed.), Handbook of research on teaching (4th ed., pp. 1122−1156). Washington, DC: American Educational Research Association.

Mundry, S. (2005). What experience has taught us about professional development. Retrieved 16 March 2006 from http://www.edvantia.org/publications/pdf/MS2005.pdf

Prebble, T., Hargraves, H., Leach, L., Naidoo, K., Suddaby, G., & Zepke, N. (2004). Impact of student support services and academic development programmes on student outcomes in undergraduate tertiary study: A synthesis of research. Retrieved 4 November 2005 from http://educationcounts.edcentre.govt.nz/publications/downloads/ugradstudentoutcomes.pdf

Shulman, L. S. (1987). Knowledge and teaching: Foundations of the new reform. Harvard Educational Review, 57(1), 1−22.

Snow-Renner, R., & Lauer, P. (2005). McREL insights: Professional development analysis. Retrieved 16 March 2006 from http://www.mcrel.org/topics/Professionaldevelopment/products/234/

Supovitz, J. (2001). Translating teaching practice into improved student achievement. In S. Fuhrman (Ed.), From the capitol to the classroom: Standards based reforms in the states (pp. 81−98). Chicago, IL: University of Chicago Press.

Tosey, P. (2002). Complexity theory: A perspective on education. Retrieved 15 March 2008 from http://www.palatine.ac.uk/files/1042.pdf

Trowler, P., & Bamber, R. (2005). Compulsory higher education teacher training: Joined-up policies, institutional architectures and enhancement cultures. International Journal for Academic Development, 10(2), 79−93.

Zepke, N., Leach, L., & Butler, P. (2009). Engaging tertiary students: The role of teacher student transactions. New Zealand Journal of Educational Studies, 44(1), 69−82.